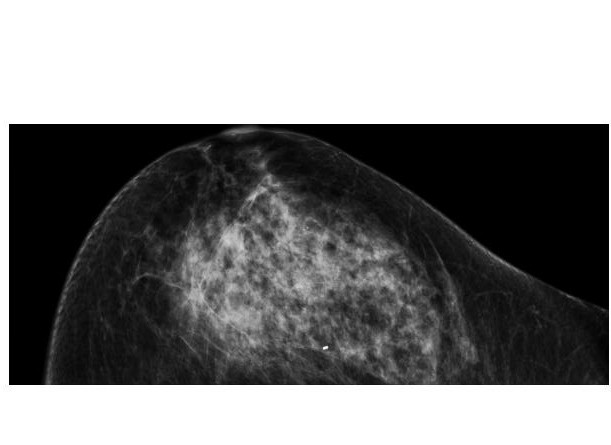

Researchers at Houston Methodist Hospital have developed artificial intelligence (AI) software that reliably interprets mammograms, giving doctors a quick and accurate prediction of breast cancer risk.

The software intuitively translates patient charts into diagnostic information 30 times faster than a human can, with 99 percent accuracy. The Houston Methodist team hopes it will help physicians better define the level of cancer risk that warrants a biopsy, giving doctors a tool to reduce unnecessary breast biopsies.

In the United States, 12.1 million mammograms are performed annually, according to the U.S. Centers for Disease Control and Prevention (CDC). Half of them yield false-positive results, according to the American Cancer Society (ACS), which means one in every two healthy women is told she has cancer.

More than 1.6 million breast biopsies are performed annually in the United States, and the ACS estimates that about 20 percent of them are unnecessary, performed because of false-positive mammogram results in cancer-free breasts.
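To put the two ACS figures in absolute terms, the short sketch below multiplies them out. It uses only the numbers quoted in the article; because the biopsy count is given as "over" 1.6 million and the share as "about" 20 percent, the result is a rough lower-bound estimate, not a figure reported by the ACS.

```python
# Rough estimate of unnecessary breast biopsies per year in the U.S.,
# using only the approximate figures quoted in the article.
annual_biopsies = 1_600_000   # "more than 1.6 million" biopsies per year
unnecessary_percent = 20      # "about 20 percent" are unnecessary (ACS estimate)

# Integer arithmetic avoids floating-point rounding in the estimate.
unnecessary_biopsies = annual_biopsies * unnecessary_percent // 100
print(f"Roughly {unnecessary_biopsies:,} unnecessary biopsies per year")
```

In other words, the ACS estimate corresponds to several hundred thousand avoidable procedures each year.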

Currently, patients whose mammograms fall into the suspicious category, which covers a broad range of 3 to 95 percent cancer risk, are recommended for biopsy.

"This software intelligently reviews millions of records in a short amount of time, enabling us to determine breast cancer risk more efficiently using a patient's mammogram. This has the potential to decrease unnecessary biopsies," said Stephen T. Wong, Ph.D., P.E., chair of the Department of Systems Medicine and Bioengineering at Houston Methodist Research Institute.

The team, led by Wong and Jenny C. Chang, M.D., director of the Houston Methodist Cancer Center, used the AI software to evaluate the mammograms and pathology reports of 500 breast cancer patients. The software scanned patient charts, collected diagnostic features and correlated mammogram findings with breast cancer subtype.

Clinicians used results such as the expression of tumor proteins to accurately predict each patient's probability of a breast cancer diagnosis.

Manual review of 50 charts took two clinicians 50 to 70 hours. The AI software reviewed 500 charts in a few hours, saving more than 500 physician hours.
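The time savings can be checked with a back-of-the-envelope extrapolation. The sketch below assumes, as a simplification not stated in the study, that manual review time scales linearly with the number of charts; the function name `extrapolate_hours` is hypothetical and used only for illustration.

```python
# Back-of-the-envelope extrapolation of manual chart-review time.
# Assumption (ours, not the study's): review time scales linearly with
# the number of charts reviewed.
MANUAL_CHARTS = 50                           # charts reviewed by hand
MANUAL_HOURS_LOW, MANUAL_HOURS_HIGH = 50, 70 # reported manual review time
STUDY_CHARTS = 500                           # charts reviewed by the AI software


def extrapolate_hours(hours: float, charts: int = STUDY_CHARTS) -> float:
    """Hypothetical helper: scale observed manual hours to `charts` charts."""
    return hours * charts / MANUAL_CHARTS


low = extrapolate_hours(MANUAL_HOURS_LOW)
high = extrapolate_hours(MANUAL_HOURS_HIGH)
print(f"Manual review of {STUDY_CHARTS} charts: about {low:.0f}-{high:.0f} hours")
```

At the manual pace, 500 charts would take roughly 500 to 700 hours, so an AI run of a few hours saves time on the order of the figure quoted above.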

"Accurate review of this many charts would be practically impossible without AI," said Wong.

Co-authors of the study included Tajel Patel, M.D., Mamta Puppala, Richard Ogunti, M.D., Joe E. Ensor, Ph.D., Tian Cheng He, Ph.D., and Angel A. Rodriguez, M.D. (Houston Methodist); Jitesh B. Shewale, Ph.D. (University of Texas School of Public Health); and Donna P. Ankerst, Ph.D. (University of Texas Health Science Center at San Antonio and Technical University of Munich, Germany).

The research was supported in part by the John S. Dunn Research Foundation.