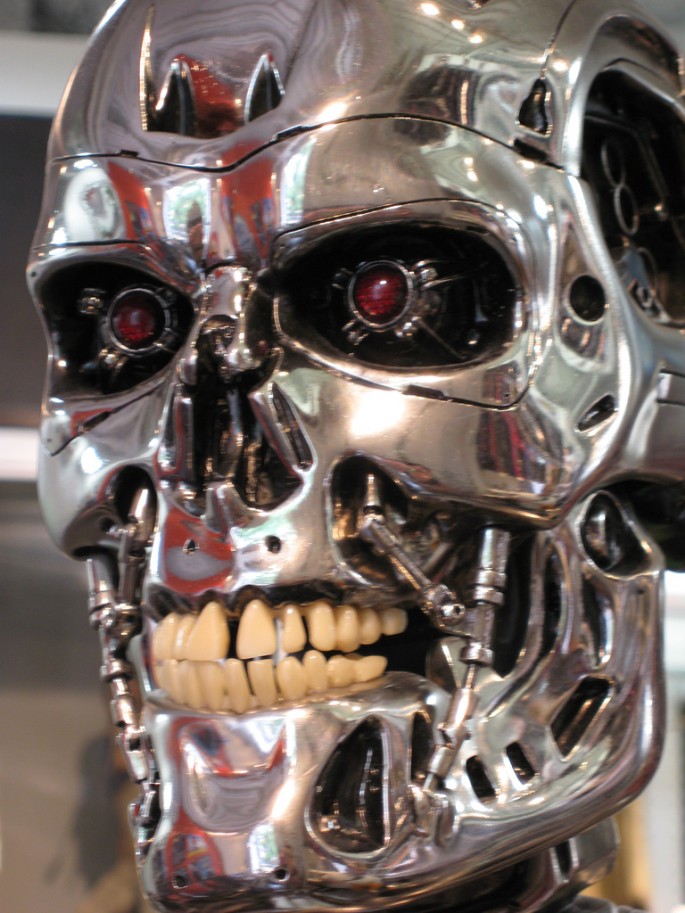

According to an artificial intelligence (AI) expert, the ongoing development of lethal autonomous weapons systems (LAWS), which select targets without commands from humans, could gravely violate fundamental principles of human dignity.

This ethical issue is explored by Stuart Russell, a professor of computer science at the University of California, Berkeley. The AI and robotics communities face an ongoing dilemma: whether to develop and support these highly intelligent, autonomous systems or to oppose their emergence.

Russell argues that LAWS can violate fundamental principles of human dignity because they give machines the freedom to choose whom to kill. This would become evident, for example, when such systems are tasked with eliminating anyone exhibiting "threatening behavior".

The new LAWS could include anything from armed quadcopters to self-driving tanks able to track down and eliminate potential enemies.

This technology could transform modern warfare; some experts describe LAWS as the third revolution in warfare, after gunpowder and nuclear weapons.

That future may be close at hand: two programs at the U.S. Defense Advanced Research Projects Agency (DARPA) indicate that the agency is already planning to use such autonomous systems.

To date, international humanitarian law contains no specific provisions for autonomous military weapons. The United Nations has, however, already held numerous discussions on the implications of LAWS, and that process is still under way until laws governing this type of AI technology are passed.

Russell also provided expert advice and testified at last April's meeting of the UN Convention on Certain Conventional Weapons (CCW), where countries held opposing views on what measures should be taken regarding this military AI.

Germany was adamant, stating that it will not accept that decisions over life and death be made by an autonomous robotic system. The UK, US and Israel, by contrast, believe that an international treaty on LAWS is unnecessary.

Russell's commentary is published in the journal Nature.