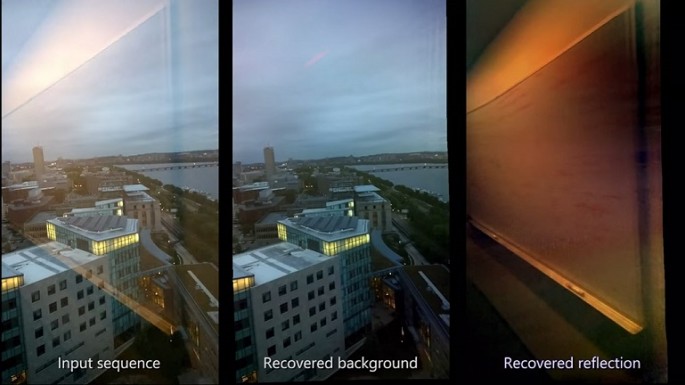

Google's new auto-correction technology promises to improve how digital camera users capture and share photos by removing reflections, such as those in windows, and foreground obstructions, such as water droplets and chain-link (cyclone) fences. The tech giant developed the technology together with the Massachusetts Institute of Technology (MIT).

Processed images may still retain traces of the reflected objects, but the results are a notable improvement over the original pictures.

Another advantage of the new technology is that it is fully automatic. Unlike many object-removal features in smartphone cameras, it does not require the user to select the objects to be removed.

For the technology to work, the user must capture five to seven frames from slightly different angles, a process similar to taking a panorama picture.

According to Tech News Daily, the camera's software compares the frames to determine what the scene should look like, then merges them into a single photograph without the reflections and obstructions. Capturing the frames takes a few seconds.
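To make the "compare and merge" step more concrete, here is a heavily simplified sketch. It does not reproduce the Google/MIT method, which the article does not detail; instead it swaps in a much simpler, well-known technique, per-pixel median stacking. It assumes the frames have already been aligned to the background scene, so that a foreground obstruction lands on different pixels in each frame. Images are modeled as small grayscale lists of rows, and the function name `median_merge` is hypothetical.

```python
from statistics import median

# Hypothetical helper: a simplified stand-in, NOT the Google/MIT algorithm.
# Assumes every frame is already aligned to the background scene, so a
# foreground obstruction (e.g. a fence wire) covers different pixels in
# different frames while the background stays in place.
def median_merge(frames):
    """Merge aligned grayscale frames (lists of rows) by per-pixel median."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            # Gather this pixel's value across all frames; the median
            # ignores a value that is an outlier in only a minority of frames.
            row.append(median(frame[y][x] for frame in frames))
        merged.append(row)
    return merged

# Five 2x3 frames of a uniform background (value 100); a dark "wire"
# (value 0) covers a different pixel in each frame.
background = [[100, 100, 100], [100, 100, 100]]
frames = []
for y, x in [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1)]:
    frame = [row[:] for row in background]
    frame[y][x] = 0
    frames.append(frame)

print(median_merge(frames))  # [[100, 100, 100], [100, 100, 100]]
```

Median stacking only works when an obstruction covers any given pixel in fewer than half of the frames; reflections that overlap heavily from frame to frame would need considerably more sophisticated processing than this.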

According to Android Police, Google and MIT might be able to make the process seamless if the footage the algorithm needs can be captured in a couple of seconds. The camera app could continuously buffer a short stretch of video in memory. Then, when the shutter button is pressed, the algorithm could use the buffered frames to create a photo without the reflections and foreground obstructions.
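The buffering idea can be sketched with a fixed-size ring buffer that keeps only the most recent frames and silently discards older ones as new ones arrive. This is a hypothetical illustration of the concept, not code from Google or MIT; the class name `FrameBuffer`, the capacity of seven frames (chosen to match the upper end of the five-to-seven-frame range), and the use of strings as stand-ins for video frames are all assumptions.

```python
from collections import deque

class FrameBuffer:
    """Hypothetical ring buffer holding the most recent video frames."""

    def __init__(self, capacity=7):
        # A deque with maxlen automatically drops the oldest frame
        # once the buffer is full, so memory use stays bounded.
        self._frames = deque(maxlen=capacity)

    def push(self, frame):
        """Store a frame; called for every frame the camera preview produces."""
        self._frames.append(frame)

    def on_shutter(self):
        """Return a snapshot of the buffered frames, oldest first."""
        return list(self._frames)

buffer = FrameBuffer(capacity=7)
for n in range(20):  # simulate 20 preview frames
    buffer.push(f"frame-{n}")

print(buffer.on_shutter())
# ['frame-13', 'frame-14', 'frame-15', 'frame-16', 'frame-17', 'frame-18', 'frame-19']
```

Because the deque is bounded, the preview can run indefinitely without memory growing; the snapshot taken at shutter time would then be handed to the reflection-removal algorithm.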

Google has not announced any release plans, but the technology could be used in Android and Google Photos. It might, however, be released only as part of cloud-based services.

The new camera technology will be presented at the Siggraph 2015 conference, which takes place later this month.