Given how fast technology is developing these days, what was once considered science fiction could become reality within the next decade.

Artificial intelligence (AI) is projected to balloon into a $70 billion industry by 2020, according to IDC research cited by Bank of America, as reported by Fortune. That would be a significant jump from the industry's $8.2 billion value in 2013.

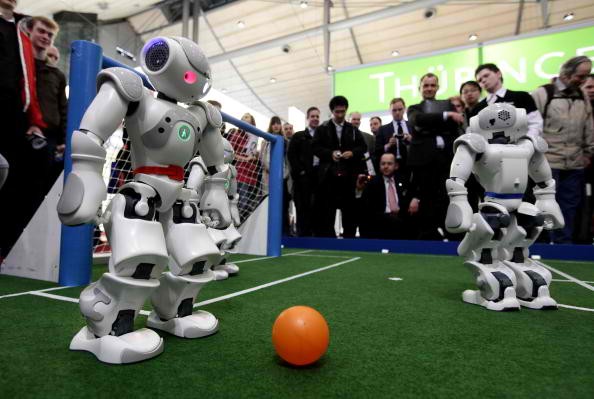

AI is already used in data analytics and web search systems, while driverless cars and service robots are under development. With that, a future in which AI changes people's everyday lives does not seem that distant.

Speaking to a crowd of tech leaders at a conference hosted last week by the online publication Recode, Tesla Motors and SpaceX CEO Elon Musk said that AI and machines could become so sophisticated that humans may need to be injected with "neural laces" linking them to computers so they can keep up. Tesla has been investing heavily in artificial intelligence research. In December last year, it was among the backers that collectively pledged $1 billion to OpenAI, a nonprofit artificial intelligence research center.

Sundar Pichai, chief executive of Alphabet's Google, likewise believes in the "huge potential" of AI, the publication also reported. Google was the first to develop voice recognition software based on "deep neural networks," and its driverless car is currently undergoing a series of tests.

Still, AI should not take over everything from humans; humans should remain in control. For one, things could spiral out of control, which would spell big trouble for the human race. This is why some researchers want something like a "big red button" that would interrupt AI programs should things go awry, The Verge reported.

"If such an agent is operating in real-time under human supervision, now and then it may be necessary for a human operator to press the big red button to prevent the agent from continuing a harmful sequence of actions - harmful either for the agent or for the environment - and lead the agent into a safer situation," reads the 2016 study by Google-owned AI lab DeepMind and the University of Oxford.